- What is flow typing?

- Why should I care?

- Design in context, or why you would and wouldn't want to implement this

- What can we do about this?

- Theory and practice of flow typing

Some programming languages have a feature known as flow typing. Flow typing narrows types based on control flow, and I believe it generally increases development productivity. In this cc I'll outline the behavior of flow typing, explain why it works well in some languages, would not work so well in others, and where flow typing can fit nicely elsewhere.

What is flow typing?

A type system observes flow typing when it refines values' types due to control flow. In this sense, flow typing avoids making you squint to see narrowed types. As a simple example in TypeScript,

tsViewsResponse = {views : number}|{error : string};declare functiongetViewsOf (route : string):ViewsResponse ;constviewsResp =getViewsOf ('/cc');if ("views" inviewsResp ) {console .log (`Had ${viewsResp .views } views`)} else {console .error (`Failed to get views: ${viewsResp .error }`)}

The type of viewsResp here is refined based on the

conditional branch taken, because entry into one branch means viewsResp must

be of a narrower type than the one getViewsOf was declared to return.

Why should I care?

Good question. I claim that because it makes programs stricter by enriching the state of types with the possible runtime state, flow typing makes software more expressive and its development safer.

Like everything else, there is some degree of pedantry here. But this is not an armchair exercise; I genuinely believe that where they can, languages should support this kind of type refinement. To motivate this further, let's walk through why TypeScript and MyPy adopted it, and look at examples in other languages where this kind of refinement would be useful.

TypeScript and MyPy

Both TypeScript and MyPy employ type systems that permit anonymous structural

unions. For example, the TypeScript type string|number is the type that

is inhabited by all values that inhabit either string or numberWith Python's type hints, this is roughly Union[str, int]..

Now, supporting such a type system is not a light choice from an implementer's perspective (we'll look at why below). But contextually, it makes sense why these type systems do so. TypeScript and Python's type hints were built for languages where the runtime shapes of values can vary arbitrarily. This means that if you want someone using JavaScript or unannotated Python to start using your typechecker, you have to meet them where their programs are. That may include being able to specify the type of a single value that might be a string, an integer, a dictionary with five keys, or a set. In time your users may refine their types, even enough so that they can be statically type checked, but you can't expect them to do so when they (or you) can't even yet express what their types are!

Nevertheless, it's almost useless to know something is a string|number at a

specific point.

Really what you want to know is, "is this value a string, or is it a

number?", and do some computation based on that result. It's nice when the type

system follows you when the question is answered on the result of some

condition, and so falls out flow typing!

The results of this typechecking have no runtime cost in TypeScript and Python. So barring the implementation and checking cost, the significant benefit in developer experience makes such a feature a huge win.

ML-family pattern matching

Alright, so here's how this fits elsewhere. First up is pattern-matching on ADTs a la Haskell, OCaml, etc. I'll just look at Rust here since that syntax will probably be the most familiar.

The case study here is the compiler for the Roc programming language. Here is a small portion of our LLVM codegen procedure for operator calls:

rustNumAdd |NumSub |NumMul /* ... */ |NumMulChecked => {debug_assert_eq !(args .len (), 2);let (lhs_arg ,lhs_layout ) =load_symbol_and_layout (args [0]);let (rhs_arg ,rhs_layout ) =load_symbol_and_layout (args [1]);build_num_binop (op ,lhs_arg ,lhs_layout ,rhs_arg ,rhs_layout )}NumBitwiseAnd |NumBitwiseOr |NumBitwiseXor => {debug_assert_eq !(args .len (), 2);let (lhs_arg ,lhs_layout ) =load_symbol_and_layout (args [0]);let (rhs_arg ,rhs_layout ) =load_symbol_and_layout (args [1]);debug_assert_eq !(lhs_layout ,rhs_layout );letint_width =intwidth_from_layout (lhs_layout );build_int_binop (op ,int_width ,lhs_arg ,rhs_arg )}// <later branches>// ...

In short, we're branching on some groups of operations, doing some intermediate

computation/validation, and then passing the operation to a continuation. Now

importantly, NumAdd and NumBitwiseAnd are both the same type - they are

variants of a sum LowLevel:

rustLowLevel {// ...NumAdd ,// ...NumBitwiseAnd ,// ...}

which is purely nominal, as sum types in most ML-family languages are. So the

type of op being passed to

build_num_binop and build_int_binop is the same, even though both of those

functions only need to worry about a subset of the variants in LowLevel! The

type system can't help these calls out, and so the functions enforce the values

they need to care about at runtime - indeed, both functions have a default case

of unreachable!() for the variants of LowLevel they aren't concerned with.

Wouldn't it be nice if instead, we had a way to say, "in this branch, op is

LowLevel::{NumBitwiseAnd|NumBitwiseOr|NumBitwiseXor}"?

A natural reaction is that the type of LowLevel is underspecified, and that

instead it should be arranged as something like

rustLowLevelAnyNum {NumAdd , /* ... */ }pub enumLowLevelAnyInt {NumBitwiseAnd , /* ... */ }pub enumLowLevel {Num (LowLevelAnyNum ),Int (LowLevelAnyInt ),// ...}

Which - I agree - in many cases is definitely the way you should structure these

types! But at the limit, you end up with an overspecified modelFor example, the Roc compiler has a generator that

produces a memory layout Layout for a type. We have a method

that takes a type we know is a number and gives us what we know is a

Layout::Int or Layout::Float. Should we group Int and Float in a nested Layout::Number variant?

Maybe, but then we also have

functions that only return or only operate on Ints, or

Ints and TagUnions, and so on.

It turns out that Layout is the common denominator, and operating in terms of

its top-level variants feels the most natural.

Doing so reduces boilerplate in moving

things in and out of a layout, and alleviates the cognitive load

(read: annoyance) that comes with that.

, which can lead to needlessly verbose software

and a rigid design. I don't think that's always

good software engineering. I want our tools should make software development easier,

and designs that are better for tools should only be considered if they are also

better for developers.

There is another problem here, which is that the reshaping of LowLevel in this

way takes an ADT that could be represented in a byte before (it has less than

256 variants) to something that requires at least two (one for the variant tag, e.g. Num, and one

for the payload, e.g. LowLevelAnyNum). A sufficiently smart compiler

could optimize this away to one byte, and maybe it doesn't even matter

in a world of 64-byte cache lines. But it's something we should at least

keep in the back of our minds.

Narrowing inheritance hierarchies

As a last example, I want to show where this has a role in object-oriented languages. Here's a sketch of a browser rendering engine:

java

Admittedly I am not a Java expert, but almost every enterprise Java codebase I've had to work with has observed this pattern of testing whether an object is an instance of particular subclass, and using that to drive further computation. Even in the presence of such tests, Java demands that the author cast their objects appropriately. Of course you can always introduce an intermediate variable after the test, but I argue that this is still too much - upon verifying the instance of an object, the type system should be smart enough to update the object's type appropriately!

C++ does alleviate redundancy here a little bit by permitting assignment within conditionals, so you can do something like

cpp

And the newly-scope variable root will be typed as StyledBox* inside the

branch - which is a lot nicer, but why do I have to introduce a new variable?

Actually, there are decent reasons, which we'll now get to.

Design in context, or why you would and wouldn't want to implement this

The thing with programming language design, like anything else, is that you can't design features in isolation. And, there are many levels to your features' interactions that must be considered and balanced.

Platitudes aside, I think this feature is "hard but worth it" for TypeScript, and "mostly doesn't fit" in the other languages we looked at. Let's look at why.

TypeScript and MyPy

We've already noted that flow typing provides a nice developer experience in these type systems, and often meets developers where they're at. A huge part of the TypeScript and MyPy type systems is having anonymous, structural union types, so this just fits naturally. There we go, a plus for flow typing!

As I mentioned, this is not the end of the story. The other big thing we have to consider here is the runtime semantics that this type-level feature introduces. In fact it doesn't change the runtime semantics at all! JavaScript and Python runtimes typically act on values uniformly or examine their shape at runtime. Moreover, TypeScript fully erases type information before lowering to JavaScript, and Python's type hints are not used by runtimes to determine value layoutsThe difference with Python is that type hints are not erased during program interpretation, so there is some cost to parsing and resolving symbols in type hints. For all intents and purposes this cost is negligible, though..

Bonus round: Implementing flow typing a-la TypeScript

Okay, bonus round - this is still not the end of the story. Turns out that implementing flow typing for anonymous structural unions in the presence of structural subtyping is a problem I would characterize as "hard". There are known techniques like normalizing to a canonical form and using polar type atoms to define subtyping, but at least for me, it doesn't feel like a walk in the park. I don't want to dive deep into the challenges hereIf you are interested in a thorough introduction to the context of this problem and algorithms for the kind of subtyping flow typing must employ, I highly recommend this tutorial-style paper by Castagna., but I'll lay out the high-level.

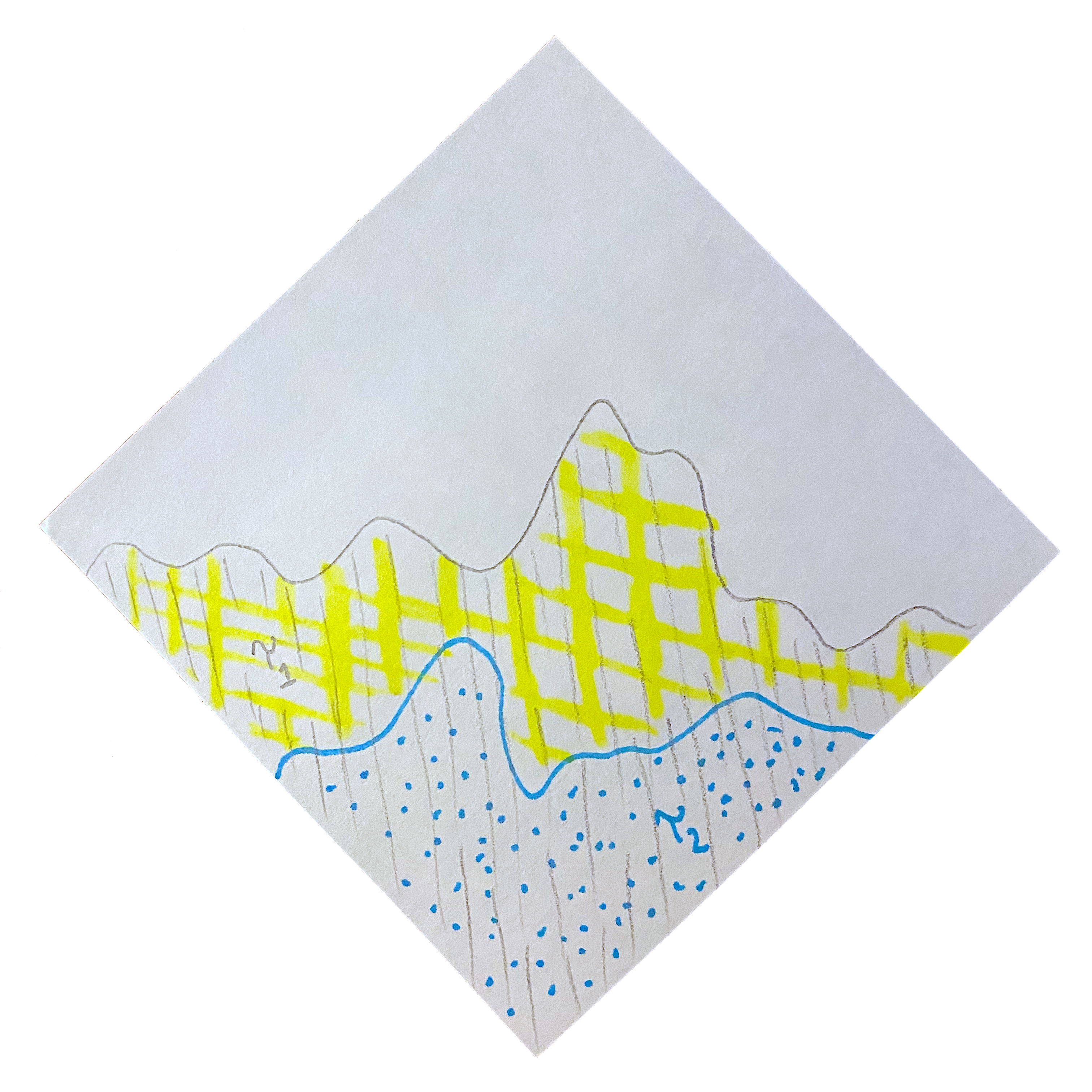

In a language like TypeScript where you can have arbitrary unions (and intersections), the space of types forms a lattice. We're going to wave our hands a bit here and severely abuse the definition, but this basically means you can take a square napkin, turn it 45 degrees, draw an arbitrary curve through the resulting diamond, and say everything below that line defines a type with a certain number of unions and intersections. Here's an example, of some funky types $\tau_1$ and $\tau_2$.

So let's say you have a value of type $\tau_1$, and in a positive branch you check whether it fits in $\tau_2$. To flow, the type system must be able to answer "does the curve $\tau_2$ lie underneath $\tau_1$?" And, in the negative branch, it must be able to answer "what does the region between them look like?"

Now a human or CV program could look at this image and say, "oh yeah, $\tau_2$ is definitely within $\tau_1$, and also here's what their difference looks like." But of course we don't represent types as pictures inside compilers, and we need a deterministic, efficient algorithm for this computation - which sounds fun! And maybe clever! And also hard!

ML-family languages

Flow typing doesn't work well with the designs of traditional ML-style languages. I'll outline two reasons I am aware of.

Inconsistencies with closed sum types

Most ML-style languages have ADTs that are nominal, not structural. This means that, for example in Rust, I can have two sum types

rustNumber {One ,OrMore }enumAge {One ,OrMore }

Where Number::One and Age::One are not the same type because their

qualified names are different, even though their

structure (and even generated code layout) are the same!

This is antithetical to flow typing, which more-or-less demands the ability to create anonymous sum types at any turn, which in turns demands that those sum types be typed structurallyYou can't meaningfully give a user-facing name to an anonymous type!.

Let's look back at a branch of the Rust example we motivated flow typing with earlier:

rustNumBitwiseAnd |NumBitwiseOr |NumBitwiseXor => {debug_assert_eq !(args .len (), 2);let (lhs_arg ,lhs_layout ) =load_symbol_and_layout (args [0]);let (rhs_arg ,rhs_layout ) =load_symbol_and_layout (args [1]);debug_assert_eq !(lhs_layout ,rhs_layout );letint_width = intwidth_from_layout(lhs_layout );build_int_binop (op ,int_width ,lhs_arg ,rhs_arg )}

Recall that I said it would be nice if we had a way to express, "in this branch, op

is LowLevel::{NumBitwiseAnd|NumBitwiseOr|NumBitwiseXor}." But I lied and

misled you! This is not nice at all! It would require a new syntactical model to

represent structural type unions, a whole new class of typing rules, and even a

model of variant polymorphism

so that I could call build_int_binop with a LowLevel::{NumBitwiseAnd} or

LowLevel::{NumBitwiseAnd|NumBitwiseOr|NumBitwiseXor}. Now, I'm not saying

these are bad features to have in the type system. But they are a huge addition

to the language, and induce a shift in the programming model - which is not

necessarily something you may want!

The same problems would be present in Haskell and OCaml - though, OCaml already has polymorphic variants, which are typed structurally. Why aren't those flow typed? I'm not sure, but my inclination is that OCaml's designers don't want to complicate type inference rules or the compilation model. Which brings us to the next point.

Complication of the compilation model

Another downside of flow typing is that languages which generate memory layouts

from types must perform implicit casts when types are narrowed. This is less

of a concern when a compiler generates uniform representations for all values,

or a language runtime has a system for dispatching common operations over

values with different memory layoutsAs JavaScript and

Python engines do.. But Rust's rustc compilerBasically the only one people use today

does not generate code in either of these ways.

Let's look again at the value op of our running example, which prior to a

particular branch has the type LowLevel. Now say it enters a branch and we'd

like to narrow the type of op to LowLevel::{NumBitwiseAnd|NumBitwiseOr|NumBitwiseXor},

which is the type expected by build_int_binop. Well, LowLevel and

LowLevel::{NumBitwiseAnd|NumBitwiseOr|NumBitwiseXor} may have different

memory layouts in the generated code! And even if they have the same broad

memory layout, how their variants are represented may differ. For example,

suppose both are represented as a byte. Even in this case, LowLevel, the

variant NumBitwiseAnd may be represented by the byte value 53, but in

LowLevel::{NumBitwiseAnd|NumBitwiseOr|NumBitwiseXor}, NumBitwiseAnd would be

represented by the byte value 0.

So, the compiler cannot consider these two types transparently, and must instead

insert an implicit cast to narrow op at either the branch or call siteThis problem is alleviated if build_int_binop accepts a narrowed type that

varies polymorphically, but then we incur a code-size cost due to

monomorphization.. We shouldn't expect such casts to be cheap in general.

The users of our language may not accept such a high implicit cost, and indeed,

we probably want to make high-cost operations explicit.

Actually, this is one area where I think C++'s model for narrowing class hierarchies is really nice. The code

cpp

which narrows root to StyledBox* from a superclass inside the branch is very

explicit about what it does - dynamic_cast incurs a runtime cost to verify the

legality of root's downcast. Yet, thanks to C++'s permission of name shadowing

in nested scopes and the syntactic sugar of assignment in if conditions, the

code is still very readable and doesn't feel crufty.

What can we do about this?

I still believe flow typing, in general, is a boon to software development, and I would like to see judicious use of it more often in languages' type systems.

But if we can't have flow typing in the presence of

- Nominal sum types

- Implicit casts

- A relatively-hard typechecker implementation (recall the bonus round)

when is it a good fit? Here's my pitch - in a ML-style language with structural polymorphic variants a la OCaml. Replace closed, nominal sum types entirely with structural ones and the problems of type consistencies and typechecker implementation are resolvedOf course, polymorphic variants have their own problems..

Roc is such an up-and-coming language, but that doesn't necessarily mean this feature is a good fit for Roc. For, we still need to deal with implicit casts. Here I think either

- A special syntax for branches that narrow, making any implicit casts strictly opt-in

- Uniform operations on values, maybe via a performant technique like Swift's witness tables (1, 2)

would work well. I have a prototype language that implements the former, but of course that's just a toy. In any case, there is merit here, and let's hope we see more flow typing across all kinds of language paradigms in the future!

Bonus round: why do polymorphic variants make flow typing easier to implement?

I claimed this was the case, and now I must justify it. Like before, I'll only provide a sketch.

Polymorphic variants are typically considered in the context of a Hindley-Milner (HM)-style type system, so we'll do that here too. In an HM type system, types don't form a lattice, and there is (usually) no notion of a subtyping operator. Instead, two types $\tau_1$ and $\tau_2$ are compatible if they "unify", denoted $\tau_1 \sim \tau_2$, which roughly corresponds to equivalence modulo instantiation of type variables. A type variable, like $\alpha$ in the type of the identity function $\tt{id}\co\alpha\to\alpha$, is universally quantified (polymorphic) and must be instantiated to be compared to a concrete type.

What this means is that when two structural types are compared, they are always compared by width and constructor. That means that a structural type union, composed of some variants, can only ever be compared to other structural type unions, and moreover two such unions unify only in the way described above.

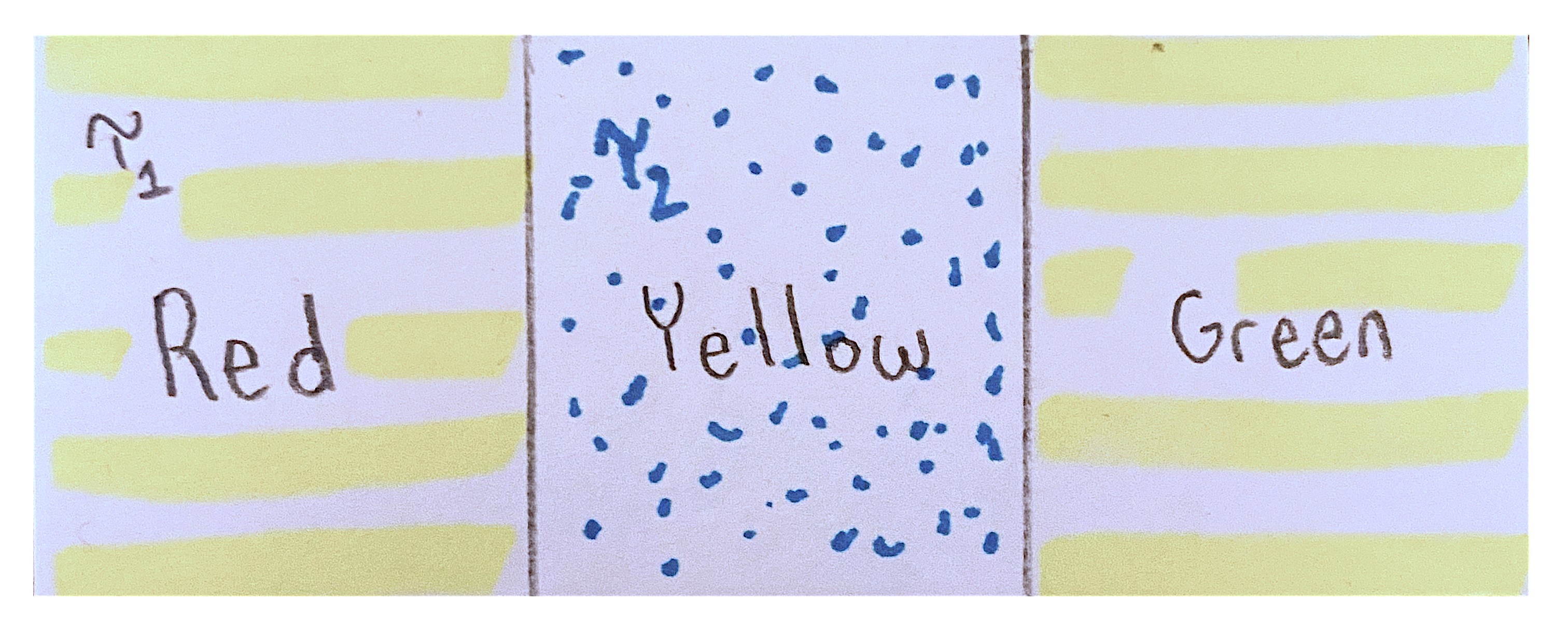

So suppose a have a value light of structural union type Red|Yellow|Green,

and the branching

match light with # light is Red|Yellow|Green here Yellow => do_yellow light # light is Yellow here _ => do_other light # light is Red|Green here

In the first branch, we need to do a set intersection to compute the narrowed type, and in the second branch we must compute a set difference from the previous branches. The difference is that unlike in the case of subtyping, where we had a proper two-dimensional lattice, we now only need to operate on one dimension, because our types are only compared by structural width.

That looks a lot easier to design algorithms for!

Theory and practice of flow typing

I've written about flow typing theory and implementation before (1, 2). Jake Donham has a nice blog series that builds up a type checker a la TypeScript's (their blog posts include a sweet visualization tool of what the checker is doing). And of course, there is no shortage of literature on flow typing (see 1, 2 for starters).